Using NFS with Kubernetes Local Storage Provisioner

For a few months now I’ve been running a Raspberry Pi Kubernetes cluster at home. Recently I wanted to experiment with stateful workloads in it, like a Postgres database. Generally you need a provisioner for this (in my case an NFS provisioner), but as of Kubernetes 1.10 you can also use storage that is local to a node. Since I had some trouble getting the NFS provisioner working, I thought I would test whether local storage could be pointed at an NFS mount instead.

The short answer is yes, it is possible, but it requires a bit of setup to get right. This post assumes that you already have an NFS server and the correct ACLs in place so that your Raspberry Pi (or any other Kubernetes node) has access. On each node that needs access to the NFS share you’ll need to edit /etc/fstab. In my case my fstab went from:

    proc /proc proc defaults 0 0
    PARTUUID=68751133-01 /boot vfat defaults 0 2
    PARTUUID=68751133-02 / ext4 defaults,noatime 0 1

to:

    proc /proc proc defaults 0 0
    PARTUUID=68751133-01 /boot vfat defaults 0 2
    PARTUUID=68751133-02 / ext4 defaults,noatime 0 1
    192.168.2.99:/volume1/k8s-storage /vol nfs auto,user,exec,rw,async,noatime 0 0

In my case I was mounting /volume1/k8s-storage from 192.168.2.99 (my NFS server) to /vol, so I created the /vol folder on each of the Raspberry Pis whose fstab I changed. A better option would have been to put it under /mnt, but I wasn’t thinking about that at the time. Apart from nfs, the rest of the options were guesses. I’m not a trained sysadmin, so I recommend you read up on them before copying and pasting what I did. Once you’re done setting up the mount points, mount everything with “sudo mount -a”. If everything was done correctly, “df -h” should show an entry for your NAS, like this:

    pi@kube-runner-02:~ $ df -h
    Filesystem                         Size Used Avail Use% Mounted on
    /dev/root                           15G 7.2G  6.7G  52% /
    devtmpfs                           460M    0  460M   0% /dev
    tmpfs                              464M    0  464M   0% /dev/shm
    tmpfs                              464M  18M  446M   4% /run
    tmpfs                              5.0M 4.0K  5.0M   1% /run/lock
    tmpfs                              464M    0  464M   0% /sys/fs/cgroup
    /dev/mmcblk0p1                      41M  22M   20M  53% /boot
    192.168.2.99:/volume1/k8s-storage  2.7T 848G  1.9T  31% /vol
    tmpfs                               93M    0   93M   0% /run/user/1000
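If you have several nodes to set up, the mount steps above can be scripted. This is only a minimal sketch: `fstab_has_nfs` and `mount_share` are hypothetical helper names, and /vol is the mount point used in this post. It assumes `awk`, `sudo` and `mountpoint` (from util-linux) are available on the node.

```shell
#!/bin/sh
# fstab_has_nfs FILE MOUNTPOINT (hypothetical helper)
# Succeeds if FILE contains a non-comment nfs/nfs4 entry for MOUNTPOINT.
fstab_has_nfs() {
    awk -v mp="$2" '
        $1 !~ /^#/ && $2 == mp && $3 ~ /^nfs4?$/ { found = 1 }
        END { exit !found }
    ' "$1"
}

# mount_share MOUNTPOINT (hypothetical helper)
# Creates the mount point, mounts everything listed in /etc/fstab and
# confirms that something is really mounted there. Needs sudo.
mount_share() {
    fstab_has_nfs /etc/fstab "$1" || { echo "no nfs entry for $1" >&2; return 1; }
    sudo mkdir -p "$1"
    sudo mount -a
    mountpoint -q "$1"
}
```

On a node you would run `mount_share /vol` after editing the fstab. Checking the fstab first means a typo in the new line is caught before `mount -a` is run.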

Now that the NFS share is mounted properly we can use it for local storage in Kubernetes 1.10. Below is a sample YAML file for a PersistentVolume, with the important pieces explained in inline comments:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: psql-volume
    spec:
      capacity:
        storage: 1Gi
      volumeMode: Filesystem
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: local-storage # must match the storageClassName in the claim; local volumes have no dynamic provisioner
      local:
        path: /vol/postgres # a folder under where the NFS storage is mounted
      nodeAffinity: # Node affinity is required for local storage
        required:
          nodeSelectorTerms:
          - matchExpressions: # Limit the hosts to hosts that have it set up, in my case all of them
            - key: kubernetes.io/hostname
              operator: In
              values:
              - kube-controller-01
              - kube-runner-01
              - kube-runner-02

When you create this Persistent Volume, you need to make sure that the “path” exists. If the directory in the path field (in my case /vol/postgres) doesn’t exist, you will get an error when a Persistent Volume Claim tries to bind it. Once that’s done, all you should have to do is set up a StatefulSet and Persistent Volume Claim to take advantage of it. Here’s an example I set up for Postgres:

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: postgres

spec:

selector:

matchLabels:

app: postgres

serviceName: postgres

replicas: 1

template:

metadata:

labels:

app: postgres

spec:

terminationGracePeriodSeconds: 10

containers:

- name: postgres

image: arm32v7/postgres:10.3

ports:

- containerPort: 5432

name: psql

env:

- name: POSTGRES_PASSWORD

valueFrom:

secretKeyRef:

name: pg-secrets

key: PG_PASSWORD

- name: POSTGRES_USER

valueFrom:

configMapKeyRef:

name: pg-config-data

key: pg.user

- name: POSTGRES_DB

valueFrom:

configMapKeyRef:

name: pg-config-data

key: pg.db

- name: POSTGRES_DATA

valueFrom:

configMapKeyRef:

name: pg-config-data

key: pg.data

volumeMounts:

- name: pg-data

mountPath: /var/lib/postgresql/data

volumeClaimTemplates:

- metadata:

name: pg-data

spec:

accessModes: [ "ReadWriteOnce" ]

storageClassName: "local-storage"

resources:

requests:

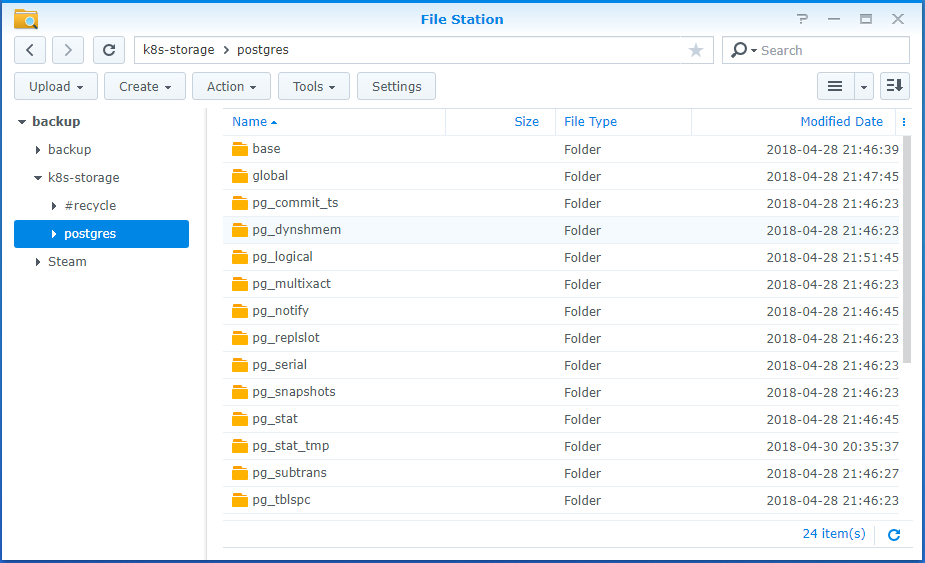

storage: 1GiAt this point once everything is applied you should see data showing up on your NFS storage and you can validate it by doing using kubectl port-forward and validating the data with psql. Here’s a screen shot of the contents of /volume1/k8s-storage/postgres from my NFS server that the above StatefulSet created:

The contents Postgres stored on my NFS share
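The apply-and-check steps described above could look roughly like this. Treat it as a sketch under assumptions rather than the exact commands I ran: the manifest file names, the `PG_USER` and `PG_DB` variables, and the helper names are all hypothetical. The pod name `postgres-0` and the claim name `pg-data-postgres-0` follow from how a StatefulSet names its pods and claims.

```shell
#!/bin/sh
# pvc_name TEMPLATE STATEFULSET ORDINAL (hypothetical helper)
# StatefulSet claims are named <template>-<statefulset>-<ordinal>.
pvc_name() {
    printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# apply_postgres: run from wherever kubectl is configured.
# The file names are assumptions; use whatever you saved the YAML as.
apply_postgres() {
    kubectl apply -f psql-volume.yaml
    kubectl apply -f postgres-statefulset.yaml
    kubectl get pv psql-volume
    kubectl get pvc "$(pvc_name pg-data postgres 0)"   # STATUS should be Bound
}

# check_postgres: run once the postgres-0 pod is Running.
# PG_USER and PG_DB are assumed to match the values in your ConfigMap.
check_postgres() {
    kubectl port-forward postgres-0 5432:5432 &
    pf_pid=$!
    sleep 2                       # give the tunnel a moment to open
    psql -h localhost -p 5432 -U "$PG_USER" -d "$PG_DB" -c '\l'
    kill "$pf_pid"
}
```

Remember that /vol/postgres has to exist on the NFS share (for example via `sudo mkdir -p /vol/postgres` on any node that has it mounted) before calling `apply_postgres`.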

Finally, one word of warning. Local volumes are not cleaned up for you, so you will have to remove unused Persistent Volumes, Persistent Volume Claims, and the data on your NFS mount manually when you are done with them.
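A minimal sketch of that manual cleanup, assuming the resource names from the manifests in this post. `cleanup_plan` is a hypothetical helper that only prints the commands, so you can review them before running anything.

```shell
#!/bin/sh
# cleanup_plan (hypothetical helper): print, but do not run, the commands
# that remove the StatefulSet, its claim, the volume, and finally the data.
# pg-data-postgres-0 is the claim created by the volumeClaimTemplate.
cleanup_plan() {
    cat <<'EOF'
kubectl delete statefulset postgres
kubectl delete pvc pg-data-postgres-0
kubectl delete pv psql-volume
sudo rm -rf /vol/postgres
EOF
}
```

Run `cleanup_plan` to see the list. The kubectl lines go wherever kubectl is configured, and the `rm` has to be run on a node that has /vol mounted. Double-check the path before deleting, since it removes the Postgres data for good.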

Thanks for reading. For all the Kubernetes configs used, please see this GitHub repo.